Metadata

- Author: parlant.io

- Full Title: Parlant vs LangGraph

- URL: https://www.parlant.io/blog/parlant-vs-langgraph/?li_fat_id=8a23a7ba-7ca9-4df8-807d-cfc05aac968a

Highlights

- In this post, we’ll demonstrate how router-based architectures inherently fail for natural, free-form conversation—not due to implementation details you can fix, but due to fundamental architectural constraints. Understanding these limitations now can save you from a complete rewrite later when you discover your graph-based system can’t scale to handle the way users actually converse. (View Highlight)

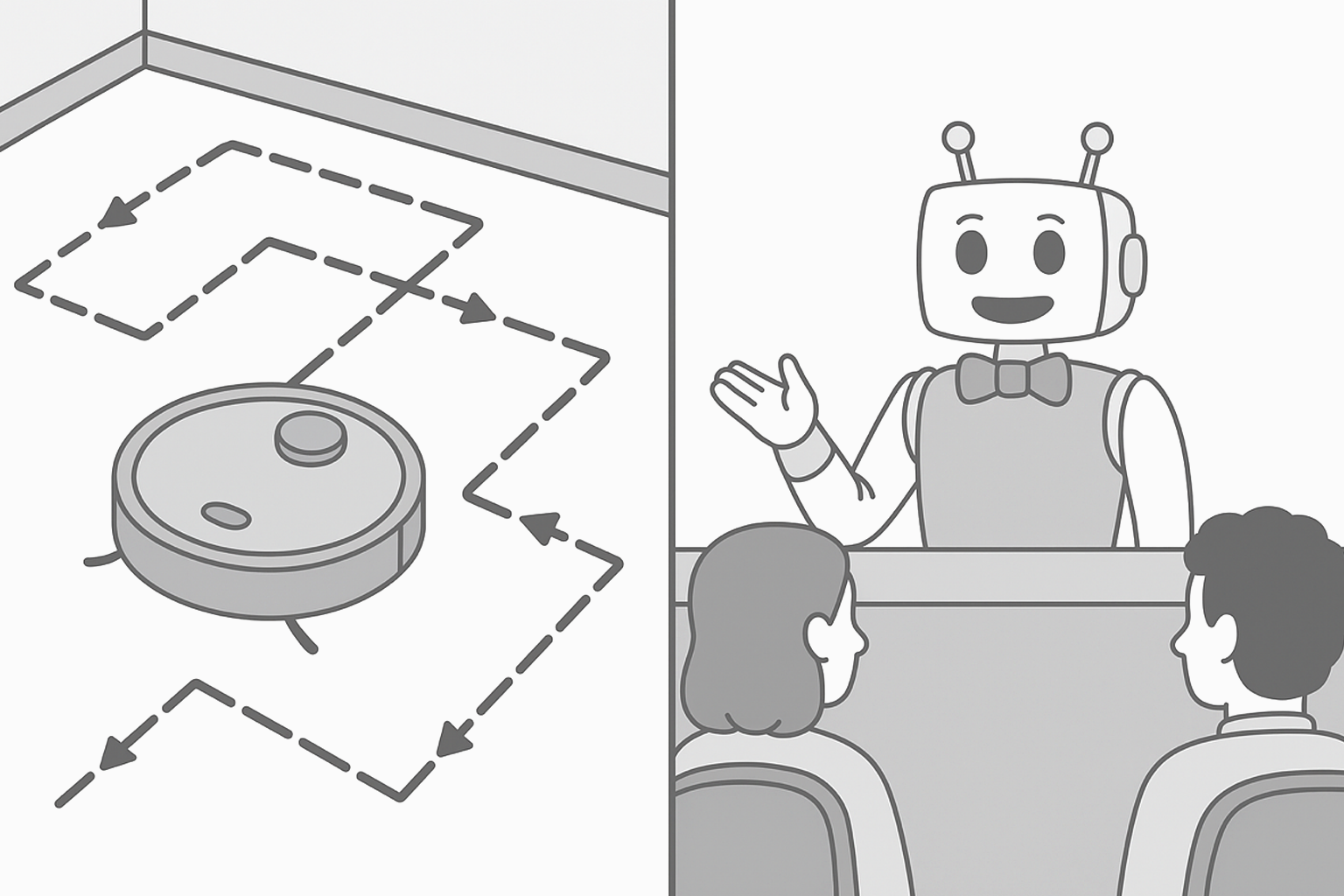

- LangGraph is a framework for building agentic workflows through explicit graph-based orchestration. It excels at task decomposition, multi-step automation, and scenarios where you need precise control over execution flow. (View Highlight)

- Parlant is an AI alignment engine designed specifically for natural customer-facing dialogue. It’s built to handle free-form conversations where users don’t follow scripts: they mix topics freely, and expect coherent responses across multiple contexts simultaneously. (View Highlight)

- LangGraph represents agentic applications as graphs where nodes are computational steps (LLM calls, tool invocations) and edges define control flow. This architecture is powerful for workflow automation and task orchestration. (View Highlight)
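As a rough illustration of the nodes-and-edges idea, the framework-free Python sketch below models a graph as two dictionaries. It deliberately does not use LangGraph's API. The names `classify`, `handle_return`, `handle_other`, and `run_graph` are hypothetical, and the keyword check stands in for what would normally be an LLM call.

```python
from typing import Callable, Dict, Optional

State = Dict[str, str]

# Hypothetical node functions; in a real system these would wrap LLM or tool calls.
def classify(state: State) -> State:
    text = state["message"].lower()
    return {**state, "intent": "return" if "return" in text else "other"}

def handle_return(state: State) -> State:
    return {**state, "reply": "Starting a return. What is your order number?"}

def handle_other(state: State) -> State:
    return {**state, "reply": "How can I help you today?"}

# Nodes are computational steps.
NODES: Dict[str, Callable[[State], State]] = {
    "classify": classify,
    "handle_return": handle_return,
    "handle_other": handle_other,
}

# Edges define control flow: each returns the next node name, or None to stop.
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "classify": lambda s: "handle_return" if s["intent"] == "return" else "handle_other",
    "handle_return": lambda s: None,
    "handle_other": lambda s: None,
}

def run_graph(entry: str, state: State) -> State:
    """Walk the graph from the entry node until an edge returns None."""
    node: Optional[str] = entry
    while node is not None:
        state = NODES[node](state)
        node = EDGES[node](state)
    return state

result = run_graph("classify", {"message": "I want to return my laptop"})
```

The point of the sketch is only that the execution path is fixed by the edges, which is what makes this style predictable for workflows.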

- A central coordinator (supervisor) routes requests to specialized agents based on the query type. Each specialized agent has its own system prompt optimized for a specific domain. (View Highlight)

- 2. Multi-Agent Network. Agents communicate in a many-to-many pattern, where each agent can invoke others as needed. This provides flexibility but requires careful coordination logic. (View Highlight)

- 3. Hierarchical Teams. Supervisor agents manage their own teams of specialized agents, creating nested structures for complex workflows. (View Highlight)

- LangGraph is indeed excellent for: • Workflow automation and orchestration • Task decomposition into specialized subtasks • Non-conversational agent applications • Scenarios requiring explicit control flow (View Highlight)

- Here’s where things get interesting for conversational AI. The router pattern (i.e., “supervisor”), where specialized nodes own specific tasks and topics, works beautifully for workflow orchestration but creates inherent challenges when users engage in natural, free-form dialogue (View Highlight)

- Isolated Specialization Is Inherently Broken

Picture this. A customer sends a message:

“Hey, I need to return this laptop. Also, what’s your warranty on replacements?”

In a router system, that message gets routed to one specialized agent. (View Highlight)

- Let’s say it lands on the Returns Agent. This agent has a system prompt optimized for returns. It knows the return policy inside and out. But what about warranties? That’s the Warranty Agent’s domain. The Returns Agent is now faced with four practical “alternatives” for handling the warranty question, none of which are actually any good: (View Highlight)

- Option 1: Ignore the warranty question

“Sure, I can help you with that return. What’s your order number?” ↪ Poor UX. The customer explicitly asked about warranties and got no acknowledgment. This is not how a good conversational framework should behave. (View Highlight)

- Option 2: Acknowledge the limitation

“I can help with the return. Regarding the warranty on replacements, I’m not certain about those details.” ↪ This is not only awkward, but, from the overall agent’s perspective, is incorrect. The overall agent can handle warranty questions—it just routed to the wrong specialized node. This will frustrate both users and developers, and lead to a disjointed user experience. This, too, is not how a good conversational framework should behave. (View Highlight)

- Option 3: Hallucinate an answer

“Sure! Our return policy is 30 days, and all replacements come with a 5-year warranty.” ↪ Dangerous and extremely common—as well as hard to prevent with LLMs. The Returns Agent isn’t grounded in warranty information, but, being an LLM, will often attempt to fabricate an answer. That “5-year warranty” might be completely wrong. (View Highlight)

- Option 4: Stack the topics

“Let me help you with that return first, and then we’ll get to your warranty question.” ↪ This is actually (kind of?) reasonable. The agent acknowledges both questions and proposes handling them sequentially. Many LangGraph implementations do exactly this. But even option 4 is not how a good conversational framework should behave. It still smells of a fundamental design flaw, because it often leads to poor user experience. (View Highlight)
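The routing problem behind these four options can be sketched in a few lines of plain Python. This is an assumption-laden toy, not how any particular LangGraph supervisor is implemented: `AGENT_KEYWORDS`, `topics_in`, and `route` are hypothetical, and keyword lookup stands in for an LLM-based classifier.

```python
from typing import Dict, List

# Hypothetical keyword lists standing in for an LLM-based intent classifier.
AGENT_KEYWORDS: Dict[str, List[str]] = {
    "returns": ["return", "refund"],
    "warranty": ["warranty", "guarantee"],
}

def topics_in(message: str) -> List[str]:
    """Every topic the message touches, in declaration order."""
    text = message.lower()
    return [agent for agent, words in AGENT_KEYWORDS.items()
            if any(word in text for word in words)]

def route(message: str) -> str:
    """Supervisor-style routing: exactly one agent is chosen per message."""
    topics = topics_in(message)
    return topics[0] if topics else "general"

message = "Hey, I need to return this laptop. Also, what's your warranty on replacements?"
chosen = route(message)
# Topics the chosen agent has no grounding for.
ungrounded = [topic for topic in topics_in(message) if topic != chosen]
```

Whichever agent wins, `ungrounded` is non-empty for this message, and the chosen agent has to ignore, deflect, guess, or defer the remaining topic.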

- The First Common Issue: Intertwined Topics. Many natural conversations don’t present as “two separate questions.” The “topics” (from a backend classification perspective) are naturally intertwined throughout the interaction. Forcing an artificial separation feels robotic. (View Highlight)

- “I bought this laptop for my business but it’s broken. Can I return it and get a tax invoice for the replacement?”

The return process and the business tax documentation question aren’t separate topics the user is stacking. They’re deeply connected:

• The tax invoice depends on whether a return is processed
• Business customers may have different return policies
• The replacement timing affects when documentation is issued

Forcing the agent to say “Let me handle your return first, then we’ll discuss invoicing” creates unnecessary friction and invites avoidable questions and objections. In natural conversation, you’d expect both aspects to be addressed together:

“Absolutely, we can process that return. For your replacement, I’ll ensure you receive a proper tax invoice. Let me pull up your account. Is the business registered under the same email?” (View Highlight)

- The Second Common Issue: Recurring Topics. Another pattern that emerges frequently in natural conversation: topics don’t just appear once and disappear. Users jump between topics, then circle back. And once multiple topics have entered the conversation, they tend to remain relevant, at least to some degree, for the rest of the discussion. (View Highlight)

- Consider this conversation flow:

Customer: “I’d like to return this laptop I bought last week.”

Agent (Returns): “I can help with that. Can you provide your order number?”

Customer: “Yeah let me get it, one sec. By the way, do you have the new model in stock? The one with the better battery?”

Agent (Inventory): “Yes, we have the XPS 15 with extended battery in stock. Would you like me to reserve one for you?”

Customer: “Yes, perfect. So if I return this one and get that new model, will I need to pay the difference, or can I just exchange them?”

Now the conversation needs to reference both the return process AND the inventory/pricing information. The customer isn’t asking two separate questions. They’re asking about the relationship between the return and the new purchase. (View Highlight)

- The challenge is that once both topics have been introduced, the rest of the conversation will very likely need to reference both in one form or another. The user isn’t thinking “Now I’m in Returns mode” or “Now I’m in Inventory mode”. They’re thinking about their situation holistically, which spans both domains. Every subsequent message will be handled by one specialized node that’s ungrounded with respect to the other relevant topic. The conversation’s integrity and coherence fragment precisely when it needs to be most cohesive. A conversational AI framework that understands natural conversations should support seamless context switching and multi-topic awareness, and that is exactly one of the design goals Parlant was built to achieve. (View Highlight)
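One way to picture the recurring-topics pattern is to track which topics have entered the conversation so far. The sketch below is a simplified illustration using an abridged version of the customer turns above. `TOPIC_KEYWORDS` and `topics_in` are hypothetical, and keyword matching is only a stand-in for real topic detection.

```python
from typing import Dict, List, Set

# Hypothetical keyword lists; a real system would use semantic matching.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "returns": ["return", "exchange"],
    "inventory": ["in stock", "new model"],
}

def topics_in(message: str) -> Set[str]:
    text = message.lower()
    return {topic for topic, words in TOPIC_KEYWORDS.items()
            if any(word in text for word in words)}

# Abridged customer turns from the conversation above.
customer_turns = [
    "I'd like to return this laptop I bought last week.",
    "Yeah let me get it, one sec. By the way, do you have the new model in stock?",
    "So if I return this one and get that new model, will I need to pay the difference?",
]

active: Set[str] = set()
active_after_turn: List[Set[str]] = []
for turn in customer_turns:
    # Topics accumulate; they do not expire when the user moves on.
    active |= topics_in(turn)
    active_after_turn.append(set(active))
```

A per-message router sees only `topics_in(turn)` for the current turn, while the conversation as a whole has been about both topics since the second turn.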

- How Parlant Approaches Free-Form Conversation. We created Parlant out of a clear view of, and frustration with, these age-old problems in mechanistic AI conversations. We therefore took a fundamentally different approach optimized for natural dialogue: dynamic assembly of a comprehensive context instead of artificially segmented routing. (View Highlight)

- Dynamic Guideline Matching. Rather than routing messages to specialized nodes, Parlant evaluates ALL of your guidelines and other contextual information, and loads only the relevant ones for each conversation turn (i.e., for each user-observable LLM request). (View Highlight)

- Notice what’s different here: there’s no routing decision. When the customer says, “I need to return this laptop for my business and get a tax invoice for the replacement,” Parlant’s guideline matcher evaluates all guidelines and determines that three are contextually relevant: (View Highlight)

- The return guideline (customer wants to return)

- The tax invoice guideline (business purchase context)

- The warranty guideline (mentioned replacement)

They get loaded into the LLM’s context simultaneously. The agent composes a response that’s dynamically grounded in all of them:

“I’d be happy to help you with that return and ensure you get proper documentation. Let me pull up your order. Could you provide the order number? Once I have that, I’ll process your return and make sure your replacement comes with a tax invoice for your business records. Just to confirm, the business is registered under the same email as this order?”

So, instead of choosing between domains, the Parlant agent assembles all the relevant grounding context on-demand. (View Highlight)
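The match-then-assemble idea described above can be sketched as follows. This is not Parlant's SDK or its matching algorithm: `Guideline`, `match_guidelines`, and `assemble_context` are hypothetical names, the guideline texts are invented, and substring keywords are a crude stand-in for the semantic matching the post describes.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Guideline:
    name: str
    condition_keywords: Tuple[str, ...]  # hypothetical stand-in for a semantic condition
    action: str

# Invented guidelines for illustration only.
GUIDELINES: List[Guideline] = [
    Guideline("return", ("return",), "Ask for the order number and start the return."),
    Guideline("tax_invoice", ("tax invoice", "business"), "Confirm business details and arrange a tax invoice."),
    Guideline("warranty", ("warranty", "replacement"), "State the documented warranty terms for replacements."),
    Guideline("shipping", ("shipping", "delivery"), "Quote the documented delivery times."),
]

def match_guidelines(message: str, guidelines: List[Guideline]) -> List[Guideline]:
    """Evaluate every guideline and keep the ones whose condition applies."""
    text = message.lower()
    return [g for g in guidelines if any(k in text for k in g.condition_keywords)]

def assemble_context(message: str, guidelines: List[Guideline]) -> str:
    """Build one prompt grounded in all matched guidelines at once."""
    matched = match_guidelines(message, guidelines)
    lines = [f"- {g.action}" for g in matched]
    return "Follow these guidelines:\n" + "\n".join(lines) + f"\n\nCustomer: {message}"

message = "I need to return this laptop for my business and get a tax invoice for the replacement"
matched_names = [g.name for g in match_guidelines(message, GUIDELINES)]
prompt = assemble_context(message, GUIDELINES)
```

Note that there is no routing step: three guidelines apply to this message, so all three end up in a single context, and the unrelated shipping guideline is left out.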

- Why This Works for Conversational Coherence. More often than not, real-world customer conversations break your textbook vision of an architected dialogue. Parlant’s approach solves the problems we identified earlier:

• No artificial separation: The agent can naturally address intertwined topics because it has access to all relevant guidelines simultaneously.
• No crude serialization: When both topics have brief answers, the agent can address them together naturally.
• No context fragmentation: There’s no handoff between agents because there aren’t separate agents. There’s just one agent with dynamically and continuously assembled context.
• Consistent grounding: The agent is grounded in all contextually relevant information, not just one domain’s specialization.

This is one big part of what makes Parlant so seamlessly effective at maintaining compliance and avoiding hallucinations in customer-facing scenarios. (View Highlight)

- How Parlant Keeps Accuracy Scalable. Of course, if you just load all relevant guidelines, the LLM’s context gets bloated. This is the same as putting everything in one giant system prompt, which leads to the “curse of instructions”: too many simultaneous instructions degrade performance (not to mention you’ll ironically end up paying more for this degraded performance, due to the higher token count). (View Highlight)

- This is where Parlant’s architecture does most of its work. The framework uses surprisingly intricate and nuanced guideline matching to accurately determine relevance BEFORE loading context. (View Highlight)
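To make the token-count argument concrete, here is some back-of-the-envelope arithmetic in Python. All numbers are invented: the 200 guidelines are synthetic, word count is only a crude proxy for tokens, and taking the first three entries stands in for whatever a real matcher would select.

```python
from typing import List

def approx_tokens(text: str) -> int:
    """Very rough proxy: one token per whitespace-separated word."""
    return len(text.split())

def context_cost(guideline_texts: List[str]) -> int:
    """Approximate prompt size contributed by the given guidelines."""
    return sum(approx_tokens(text) for text in guideline_texts)

# 200 synthetic guidelines of 12 words each (hypothetical data).
all_guidelines = [
    f"When situation {i} applies, respond with the documented policy for case {i}."
    for i in range(200)
]

# Stand-in for the output of a relevance matcher on one turn.
matched = all_guidelines[:3]

cost_everything = context_cost(all_guidelines)
cost_matched = context_cost(matched)
```

Under these assumptions the "one giant system prompt" approach carries 2400 words of instructions on every turn, versus 36 when only the matched guidelines are loaded, which is why determining relevance before loading context matters.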

- When LangGraph Works Well for Conversational AI. I want to be clear: LangGraph isn’t wrong for all conversational AI. If you have the right use case for it, you can achieve better results with it than with Parlant. (View Highlight)

- The conversation is narrow in scope, and streamlined: You’re interviewing users through a specific process like onboarding, data collection, or a structured troubleshooting flow, where they have to choose their responses from options presented to them, rather than responding freely. (View Highlight)

- Users follow a guided path: The interaction model strictly requires users to answer questions in a formal sequence rather than conversing freely. (View Highlight)

- Limited conversational freedom: Users aren’t expected to interrupt with unexpected questions mid-flow, change topics independently, or reference multiple contexts simultaneously. (View Highlight)

- Clear state transitions: The conversation has well-defined stages where it makes sense to “route” to the next step. (View Highlight)

- Granted, these are legitimate use cases where LangGraph is likely to provide great results with far less compute overhead than Parlant invests in (an investment made to maximize accuracy and user experience in genuine conversational settings). The key distinction is in the interaction model. If your users are expected to follow a strict script at all times, LangGraph’s routing works great and is not likely to run into unwieldy design issues and operational faults. If your users are conversing naturally and freely, that’s where the challenges emerge. That’s where Parlant shines, as it was intricately designed for this use case. (View Highlight)

- Using LangGraph and Parlant Together. Here’s something interesting: these frameworks aren’t competitive; they’re complementary. LangGraph can work as a lower-level orchestration tool within Parlant. (View Highlight)

- In this pattern: • Parlant handles and synthesizes the conversational flow, dynamic context management, and natural dialogue • LangGraph handles the complex internal KB workflow (retrieval, re-ranking, synthesis) (View Highlight)

- This combines the strengths of both: • Parlant ensures coherent, well-guided, natural conversation • LangGraph provides powerful workflow orchestration for agentic processing (View Highlight)
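The division of labor in this pattern can be sketched without either framework. In the toy below, `kb_workflow` plays the role of the graph-orchestrated pipeline (retrieval, re-ranking, synthesis) and `answer_turn` plays the role of the conversational layer that calls it as a tool. Every name and every knowledge-base sentence here is hypothetical, and the scoring and re-ranking heuristics are placeholders.

```python
import re
from typing import List, Set

# Invented sample articles; not real policy text.
KB_ARTICLES: List[str] = [
    "Returns need a valid order number.",
    "Replacement devices carry the standard warranty.",
    "Tax invoices are issued to registered business accounts.",
]

def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, articles: List[str], k: int = 2) -> List[str]:
    """Step 1: keep up to k articles that share at least one word with the query."""
    q = tokens(query)
    hits = [(len(q & tokens(a)), a) for a in articles]
    hits = [(score, a) for score, a in hits if score > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [a for _, a in hits[:k]]

def rerank(articles: List[str]) -> List[str]:
    """Step 2: placeholder heuristic that puts shorter articles first."""
    return sorted(articles, key=len)

def synthesize(articles: List[str]) -> str:
    """Step 3: join the surviving articles into one answer."""
    return " ".join(articles) if articles else "No matching article found."

def kb_workflow(query: str) -> str:
    """The multi-step pipeline a workflow graph would orchestrate."""
    return synthesize(rerank(retrieve(query, KB_ARTICLES)))

def answer_turn(message: str) -> str:
    """The conversational layer: calls the workflow as a tool and phrases the reply."""
    return f"Here is what I found: {kb_workflow(message)}"

reply = answer_turn("What is the warranty on replacements?")
```

The conversational layer never needs to know how many steps the workflow has; it only consumes the result, which is what keeps the two concerns separable.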

- LangGraph: Explicit Graph-Based Control Flow. LangGraph represents your application as a graph with explicit nodes and edges. You define the routing logic, specify transitions, and control execution flow. This is excellent for: • Workflow automation • Task decomposition • Multi-step processes with clear stages • Scenarios requiring precise control over execution order. It’s great for when you know what to expect from the input, which is simply not the case for raw conversational content. (View Highlight)

- Parlant: Dynamic Context Assembly. That’s where Parlant helps you keep the contracts under control, and handle the messy conversational parts cleanly and effectively. Parlant represents your application as a set of conditional guidelines and journeys within a natural conversation. Instead of routing between specialized nodes, it dynamically assembles relevant context for each turn. This is excellent for: • Free-form conversational AI • Natural dialogue where users don’t follow scripts • Scenarios where topics interweave unpredictably • Compliance-critical customer-facing applications (View Highlight)

- Use LangGraph when you need explicit orchestration and precise control over execution: workflow automation like multi-step tasks, data processing pipelines, and agent workflows where order matters; task decomposition that breaks complex problems into coordinated subtasks or multi-agent collaboration for non-conversational workloads; narrow, guided conversational flows such as structured onboarding, step-by-step data collection, or troubleshooting wizards. (View Highlight)

- Use Parlant when you’re building free‑form, customer‑facing agents where users converse naturally without following a script: support chatbots, sales and consultation assistants, or any scenario that demands comprehensive and consistent domain alignment. It shines when conversations mix topics, include interruptions, and require natural back‑and‑forth; in compliance‑critical settings where regulated behavior and avoidance of unauthorized statements are essential across business‑critical interactions; and in multi‑context dialogues where users reference multiple topics simultaneously, concerns are intertwined rather than separable, and the agent must produce coherent, cross‑topic responses. (View Highlight)

- When you need both conversational fluidity and complex workflows, use both: • Conversational agent (Parlant) with complex retrieval (LangGraph) • Natural dialogue requiring multi-step tool orchestration • Customer-facing AI with sophisticated backend processes (View Highlight)

- When we started building Parlant, we thought dynamic context assembly would be straightforward. Match relevant guidelines, load them into context, done. We quickly learned that the complexity goes much deeper than initially apparent. (View Highlight)

- Guideline Matching Complexity: • Semantic matching isn’t just about accuracy (though that alone is a massive challenge; just look at how many specialized guideline sub-matchers exist in Parlant) • You need to handle edge cases: partially relevant guidelines, conflicting conditions, different temporal scopes, and keeping track of what has and hasn’t already been done, to avoid over-conformance to instructions • False positives and false negatives create different failure modes that need to be mitigated in how you think about your agent’s design (which Parlant addresses through its conversation-optimized tool-calling and canned response systems) (View Highlight)
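One of the edge cases listed above, keeping track of what has already been done, can be illustrated with a deliberately simplified sketch. The source does not describe how Parlant implements this, so `guidelines_to_apply` and the guideline names are hypothetical, and a plain set is assumed to be enough to record applied guidelines.

```python
from typing import Iterable, List, Set

def guidelines_to_apply(matched: Iterable[str], already_applied: Set[str]) -> List[str]:
    """Keep matched guidelines whose action has not been carried out yet."""
    return [name for name in matched if name not in already_applied]

applied: Set[str] = set()

# Turn 1: two guidelines match and neither has been applied.
turn_1 = guidelines_to_apply(["ask_order_number", "offer_tax_invoice"], applied)
applied.update(turn_1)

# Turn 2: one guideline matches again, but its action was already performed.
turn_2 = guidelines_to_apply(["ask_order_number", "explain_exchange_pricing"], applied)
```

Without a filter like this, the agent would ask for the order number again on the second turn, which is the kind of over-conformance the highlight warns about. A real matcher would also need to decide when a guideline should legitimately re-apply, which this sketch ignores.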